Policy Enforcement

Subsystem Goal

Simply put, we want to prevent tenants from doing things that could compromise the platform, impact other tenants, or incur unnecessary costs.

Components in Use

While working on this subsystem, we will introduce the following components:

- Gatekeeper - Gatekeeper wraps the Open Policy Agent engine and makes it more accessible in a Kubernetes environment by allowing us to define policies using Kubernetes objects

- gatekeeper-policies - our custom policies and templates applied to the cluster, packaged as a deployable Helm chart

- landlord - our Helm chart that creates the policies based on each tenant's configuration

Background

Understanding Admission Controllers

While Kubernetes has a fairly robust RBAC system, it is still based on the ability to perform actions on specific resources. As such, it isn't able to help us with many scenarios, including the following:

- While tenants should be able to create Services, how can we prevent them from defining their own LoadBalancer or NodePort Services?

- While tenants should be able to define Ingress objects, how do we prevent them from defining an Ingress for a domain that actually belongs to another tenant?

- How can we prevent tenants from running privileged pods, mounting host volumes, and performing other potentially dangerous actions restricted by the Pod Security Standards?

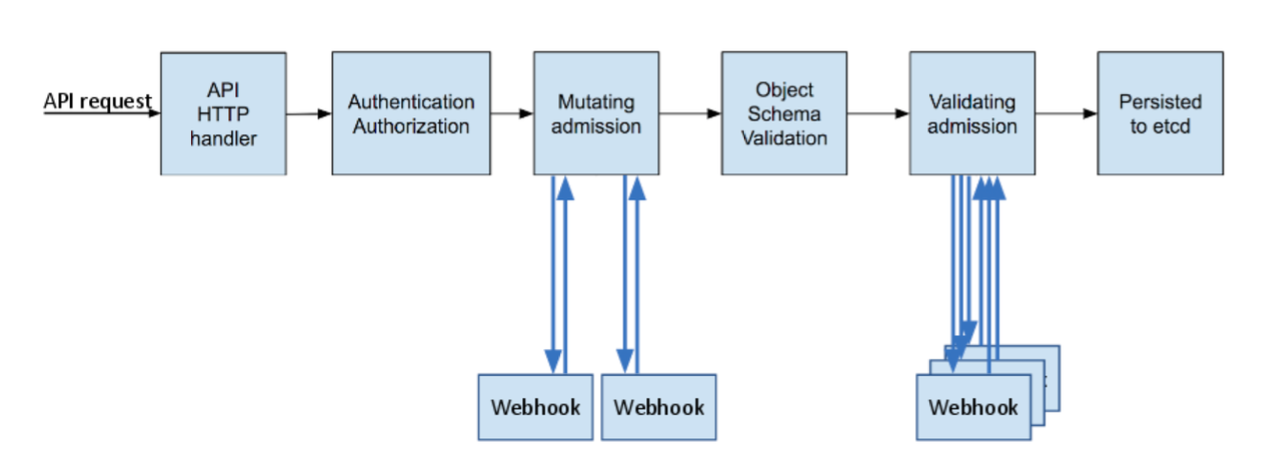

Using admission controllers (and another guide), we can deploy services that sit in the API request process and either mutate an incoming object or validate it to determine if it should be accepted and persisted.

Rather than defining our own webhook services and policy engine, we decided to leverage Gatekeeper.

Gatekeeper and OPA

Gatekeeper is an open-source project that wraps the Open Policy Agent (OPA) engine and provides the ability to define policies without worrying about the semantics of the admission controller.

OPA provides the ability to define policies in a language called Rego. While Rego can take a while to understand, you can read it as a series of truth statements or declarations. As long as the statements are true, execution will continue. For example:

```rego
package block_load_balancer_services

violation[{"msg": msg}] {
  input.review.kind.kind == "Service"
  input.review.object.spec.type == "LoadBalancer"
  msg := sprintf("LoadBalancer Services are not authorized - %q", [input.review.object.metadata.name])
}
```

The `input.review` object comes from the payload received by the admission controller, specifically the `.request` portion of the payload (see an example here).

With these assertions, a message is only set if the incoming object is a Service whose `spec.type` is `LoadBalancer`. With Gatekeeper, any message becomes a validation error and prevents the object from being stored.

In addition, Gatekeeper lets you pass parameters to a policy, so the same policy can serve multiple use cases (such as specifying the hostnames each tenant is allowed to use). We'll see an example of that below.

Deploying it Yourself

Installing Gatekeeper

- Install Gatekeeper using its Helm chart. This installs Gatekeeper into a namespace named `platform-gatekeeper-system`.

  ```shell
  helm repo add gatekeeper https://open-policy-agent.github.io/gatekeeper/charts
  helm repo update
  helm install gatekeeper/gatekeeper --name-template=gatekeeper --namespace platform-gatekeeper-system --create-namespace
  ```

  After a moment, you should see a few pods running in the `platform-gatekeeper-system` namespace.

  ```
  > kubectl get pods -n platform-gatekeeper-system
  NAME                                            READY   STATUS    RESTARTS   AGE
  gatekeeper-audit-5f66c86485-g66t9               1/1     Running   0          41s
  gatekeeper-controller-manager-b8fcdfb56-5z99d   1/1     Running   0          41s
  gatekeeper-controller-manager-b8fcdfb56-wp4bj   1/1     Running   0          41s
  gatekeeper-controller-manager-b8fcdfb56-xnx67   1/1     Running   0          41s
  ```

A simple example

- Now that Gatekeeper is installed, let's define a simple `ConstraintTemplate`. This one simply blocks every `Service` with a `spec.type` of `LoadBalancer`. We'll create a more sophisticated policy in the next section. Run the following command to define our ConstraintTemplate.

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: templates.gatekeeper.sh/v1beta1
  kind: ConstraintTemplate
  metadata:
    name: blockloadbalancerdemo
  spec:
    crd:
      spec:
        names:
          kind: BlockLoadBalancerDemo
    targets:
      - target: admission.k8s.gatekeeper.sh
        rego: |
          package block_loadbalancers_demo

          violation[{"msg": msg}] {
            input.review.kind.kind == "Service"
            input.review.object.spec.type == "LoadBalancer"
            msg := sprintf("LoadBalancer not permitted on Service - %v", [input.review.object.metadata.name])
          }
  EOF
  ```

  Once the template is defined, Gatekeeper creates a new custom resource definition using the kind specified in `spec.crd.spec.names.kind`. Creating an instance of that custom resource is how we actually apply the policy.

- Now, let's create a `BlockLoadBalancerDemo` object to ensure no `Service` in our tenant namespace can be of type `LoadBalancer`.

- Now, let's try to create a LoadBalancer Service in the `sample-tenant` namespace. We should see that the request is denied!

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: v1
  kind: Service
  metadata:
    name: lb-service
    namespace: sample-tenant
  spec:
    type: LoadBalancer
    selector:
      app: sample
    ports:
      - port: 80
  EOF
  ```

  When you run it, you should see the following error:

  ```
  Error from server ([sample-tenant] LoadBalancer not permitted on Service - lb-service): error when creating "STDIN": admission webhook "validation.gatekeeper.sh" denied the request: [sample-tenant] LoadBalancer not permitted on Service - lb-service
  ```

  Our validation worked!
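The step that creates the `BlockLoadBalancerDemo` object doesn't include its manifest. Below is a minimal sketch. It assumes the constraint is named `sample-tenant`; Gatekeeper prefixes denial messages with the constraint's name, which would explain the `[sample-tenant]` in the error above. It also assumes the constraint should only match Services in the `sample-tenant` namespace. Apply it before creating the LoadBalancer Service, for example with the same `cat <<EOF | kubectl apply -f -` pattern.

```yaml
# Sketch of a BlockLoadBalancerDemo constraint (name and match scope are assumptions).
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: BlockLoadBalancerDemo       # the kind created from the ConstraintTemplate
metadata:
  name: sample-tenant             # assumed name; shows up as the [sample-tenant] prefix
spec:
  match:
    namespaces:
      - sample-tenant             # only enforce in the tenant namespace
    kinds:
      - apiGroups: [""]           # core API group
        kinds: ["Service"]
```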

A parameterized policy

Now, let's create a slightly more sophisticated policy that includes parameters. This is especially useful for limiting the Ingress hostnames a namespace is authorized to use.

For this example, we're going to use a simplified version of our Ingress policy. The real one supports wildcards in the parameters, but that makes the policy a lot more complicated. So... we'll keep it simple here.

- Run the following command to define a new ConstraintTemplate.

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: templates.gatekeeper.sh/v1beta1
  kind: ConstraintTemplate
  metadata:
    name: authorizedingresshost
  spec:
    crd:
      spec:
        names:
          kind: AuthorizedIngressHost
        validation:
          # Schema for the "parameters" field
          openAPIV3Schema:
            type: object
            properties:
              domains:
                type: array
                items:
                  type: string
    targets:
      - target: admission.k8s.gatekeeper.sh
        rego: |
          package authorized_ingress_host

          # Flag each Ingress rule whose host is not in the allowed list
          violation[{"msg": msg}] {
            input.review.kind.kind == "Ingress"
            host := input.review.object.spec.rules[_].host
            not host_authorized(host)
            msg := sprintf("Unauthorized host on Ingress - %q", [host])
          }

          # True when the host exactly matches one of the configured domains
          host_authorized(host) {
            host == input.parameters.domains[_]
          }
  EOF
  ```

  You might have noticed the new `spec.crd.spec.validation` field, which outlines the additional properties our custom resource should accept.

- Now, let's apply our new policy to limit the `sample-tenant` namespace to only the `sample-app.localhost` host (which we used in the last step).

- Now, let's create an Ingress that should work:

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: valid-ingress
    namespace: sample-tenant
  spec:
    rules:
      - host: sample-app.localhost
        http:
          paths:
            - path: /
              pathType: Prefix
              backend:
                service:
                  name: sample
                  port:
                    number: 80
  EOF
  ```

  That should work! But it may not if you already went through the GitOps steps. :)

- Now, let's create an Ingress that uses an unauthorized host. It should fail!

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: invalid-ingress
    namespace: sample-tenant
  spec:
    rules:
      - host: invalid.localhost
        http:
          paths:
            - path: /
              pathType: Prefix
              backend:
                service:
                  name: sample
                  port:
                    number: 80
  EOF
  ```

  You should see an error message!
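The step that limits `sample-tenant` to `sample-app.localhost` needs an `AuthorizedIngressHost` object, but its manifest isn't shown. A minimal sketch follows. It assumes the constraint is named `sample-tenant`, like the other constraints in this guide. The `domains` list under `spec.parameters` is what the Rego reads as `input.parameters.domains`. Apply it before creating the two Ingresses.

```yaml
# Sketch of an AuthorizedIngressHost constraint (name and scope are assumptions).
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: AuthorizedIngressHost
metadata:
  name: sample-tenant               # assumed name
spec:
  match:
    namespaces:
      - sample-tenant
    kinds:
      - apiGroups: ["networking.k8s.io"]
        kinds: ["Ingress"]
  parameters:
    domains:                        # validated by the openAPIV3Schema in the template
      - sample-app.localhost
```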

Deploying the Platform Policies locally

If you want to test the policies locally, you can install the Helm chart that defines the policies yourself!

- Install the Helm chart using the following commands:

- Now, you can use any of the templates to test the policies used in the platform. For now, let's define a policy that blocks privileged pods in the `sample-tenant` namespace.

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: constraints.gatekeeper.sh/v1beta1
  kind: PssPrivilegedPod
  metadata:
    name: sample-tenant
  spec:
    match:
      namespaces:
        - sample-tenant
      kinds:
        - apiGroups: [""]
          kinds: ["Pod"]
        - apiGroups: ["apps"]
          kinds: ["DaemonSet", "ReplicaSet", "Deployment", "StatefulSet"]
        - apiGroups: ["batch"]
          kinds: ["Job", "CronJob"]
  EOF
  ```

  If this command fails with `no matches for kind "PssPrivilegedPod"`, wait a moment and try again. Gatekeeper simply hasn't finished processing the ConstraintTemplate to create the custom resource definition.

- Now, if we try to create a Pod (or anything that uses the Pod spec) with a privileged container, it'll be blocked! Let's test that out.

  ```shell
  cat <<EOF | kubectl apply -f -
  apiVersion: v1
  kind: Pod
  metadata:
    name: sample-privileged-pod
    namespace: sample-tenant
  spec:
    containers:
      - name: nginx
        image: nginx
        securityContext:
          privileged: true
  EOF
  ```

  And... it should fail!

  ```
  Error from server ([sample-tenant] Container 'nginx' of Pod 'sample-privileged-pod' should set 'securityContext.privileged' to false): error when creating "STDIN": admission webhook "validation.gatekeeper.sh" denied the request: [sample-tenant] Container 'nginx' of Pod 'sample-privileged-pod' should set 'securityContext.privileged' to false
  ```
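As a counter-check, the same Pod with `privileged` set to `false`, as the error message asks, should be admitted. This sketch assumes `PssPrivilegedPod` is the only constraint matching the namespace. If you enabled other policies from the chart, they may still reject it for other reasons. The Pod name is hypothetical.

```yaml
# Counter-example: same container, explicitly unprivileged.
apiVersion: v1
kind: Pod
metadata:
  name: sample-unprivileged-pod   # hypothetical name
  namespace: sample-tenant
spec:
  containers:
    - name: nginx
      image: nginx
      securityContext:
        privileged: false         # satisfies the PssPrivilegedPod check shown above
```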

What's next?

Now that we have policy enforcement plugged in, how do we actually grant access to the tenant resources to the proper users? In the next section, we'll talk about user authentication.

Go to the User Authentication subsystem now!

Common Troubleshooting Tips

Coming soon!